About Me

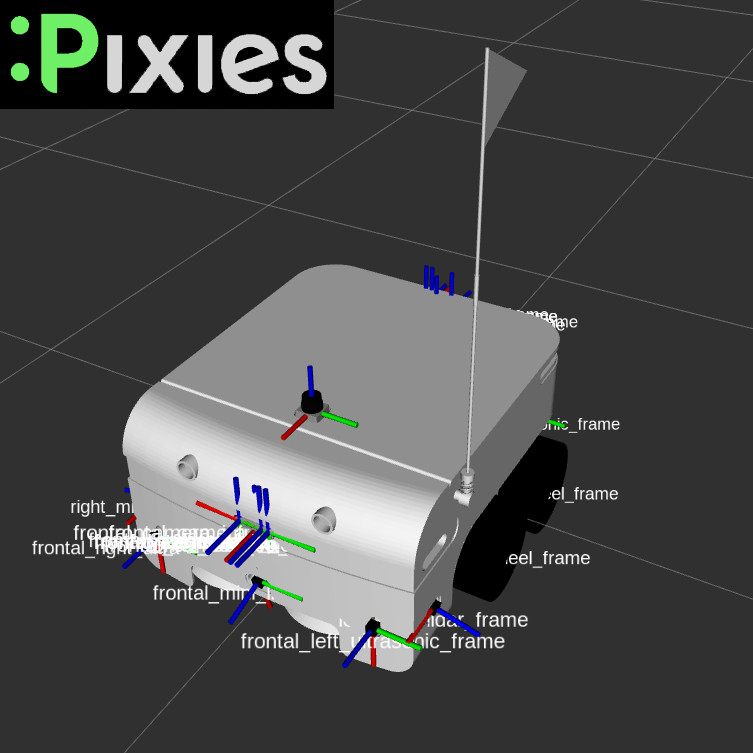

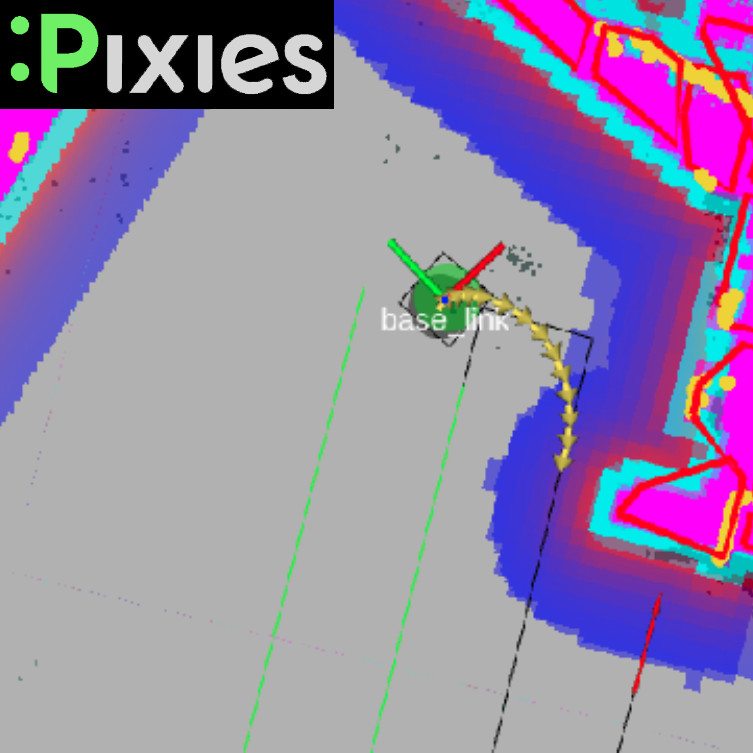

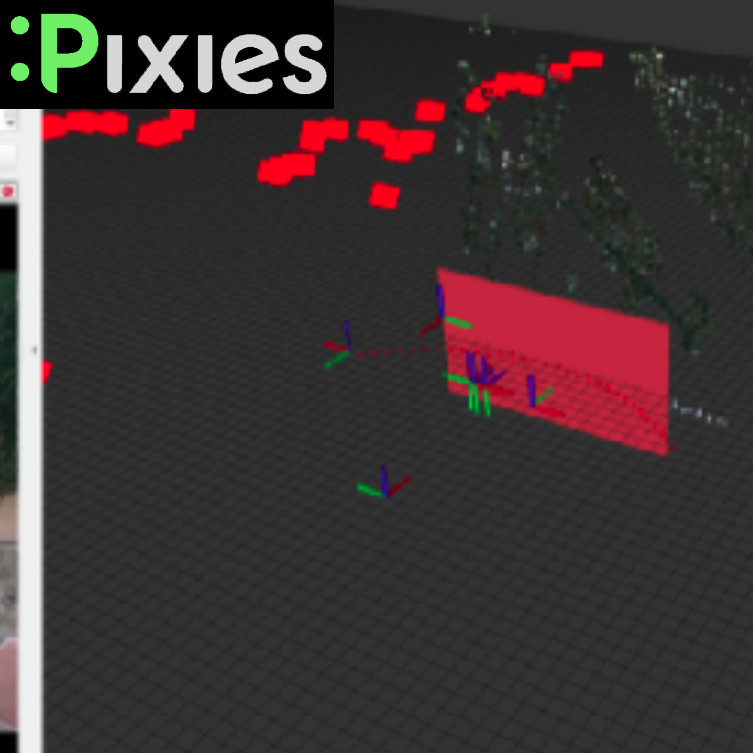

Hey there, I'm Michele Ciciolla, born and bred in Rome with a lifelong passion for technology. After finishing high school, I jumped straight into studying Computer Science Engineering at Università Roma Tre. That's where I got my hands dirty with algorithms, data structures, and automation (all the good stuff). During my undergrad, I got hooked on mobile robot navigation, and that interest led me to write my bachelor's thesis on the topic. It was such a blast that I decided to keep the momentum going and enrolled in a master's program in Artificial Intelligence and Robotics at Università La Sapienza. There, I got into some seriously cool stuff like robot kinematics, motion control, computer vision, and deep learning.

I'm all about diving into research and development, especially when it comes to software engineering for robotics, artificial intelligence, automotive tech, and even the space and defense sectors. Let's make some waves in tech!